A job is the execution of a process on one or multiple Robots. After creating a process, the next step is to execute it by creating a job.

Jobs can be assigned manually from the Jobs page, or in a preplanned manner from the Triggers page.

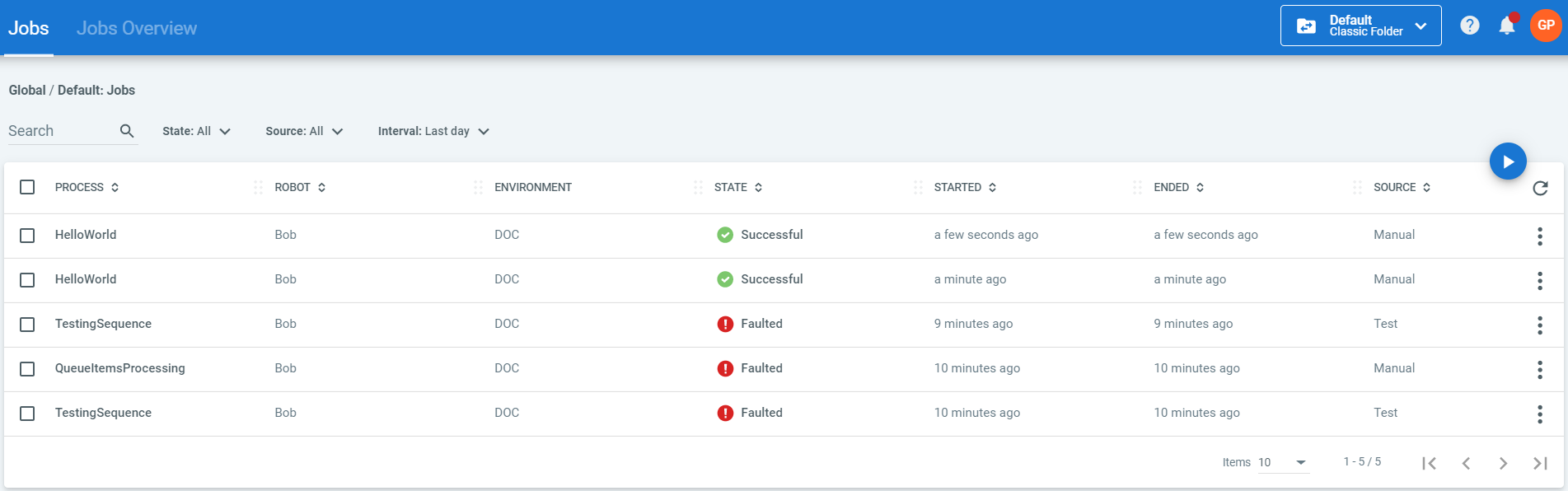

The Jobs page displays all jobs that have been executed, are still running, or are pending, regardless of whether they were started manually or through a schedule. Jobs started on Attended Robots from their tray are also displayed here, with Agent shown as their source.

Important!

The Jobs page displays job information only from the currently selected folder.

On the Jobs page, you can manually start a job, assign it an input parameter (if configured), or display its output parameter. You can also Stop or Kill a job and display the logs it generated. A job that has already reached a final state can be restarted with Restart, either keeping its original settings or changing them. To troubleshoot faulted jobs, see the details available on the Job Details window. For unattended faulted jobs, you can also troubleshoot by recording the execution; see the Recording section.

Note:

By default, any process can be edited while it has associated running or pending jobs. Keep the following in mind:

Running jobs associated with a modified process use the initial version of the process. The updated version is used for newly created jobs and for the next triggered run of the same job.

Pending jobs associated with a modified process use the updated version.
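One way to read these two rules is that a job resolves the process version when it starts running, not when it is created. The sketch below models that reading; `Process`, `Job`, `bound_version`, and the process name are hypothetical and are not part of any Orchestrator API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Process:
    """Hypothetical stand-in for an Orchestrator process; not a UiPath API."""
    name: str
    version: str


@dataclass
class Job:
    """Hypothetical job record that resolves the process version when it starts."""
    process: Process
    state: str = "Pending"
    bound_version: Optional[str] = None  # set once the job starts running

    def start(self) -> None:
        # Assumption: the version is read at start time, so a job that is still
        # pending when the process is edited picks up the updated version.
        self.bound_version = self.process.version
        self.state = "Running"


process = Process("InvoiceProcessing", "1.0.0")
running_job = Job(process)
running_job.start()           # already running before the edit
pending_job = Job(process)    # still pending when the edit happens

process.version = "1.0.1"     # the process is edited
pending_job.start()

print(running_job.bound_version, pending_job.bound_version)  # 1.0.0 1.0.1
```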

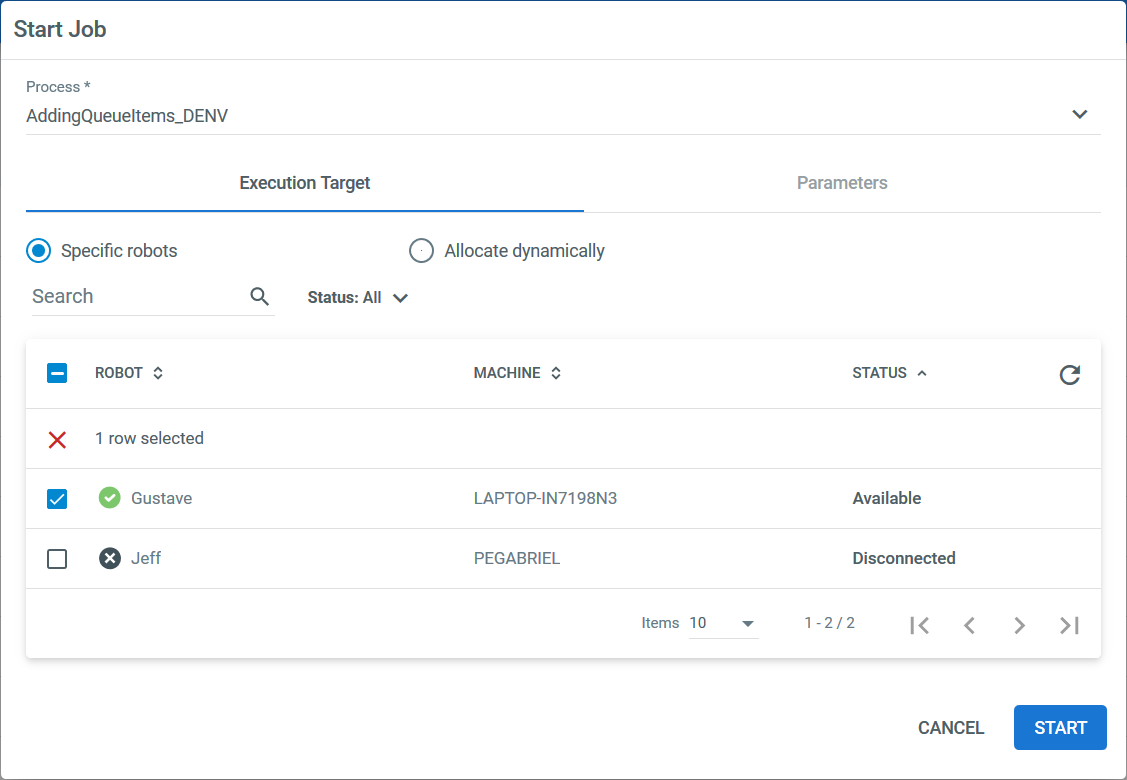

Execution Target

When creating a new job, you can assign it to specific Robots or have Robots allocated dynamically.

The Specific Robots option executes the job only on the Robots you select.

The Allocate Dynamically option executes the same process multiple times on whichever Robot becomes available first. Jobs are placed in a pending state in the environment workload, ordered by creation time. As soon as a Robot becomes available, it executes the next job in line.

If you define several jobs that run the same process multiple times, the jobs accumulate in the environment queue, ordered by creation time, and are executed in chronological order whenever a Robot becomes available.

Note

With the Allocate Dynamically option, you can execute a process up to 10,000 times in one job.
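The dynamic allocation described above behaves like a first-in, first-out queue shared by all Robots in the environment. The following is a minimal simulation of that behavior, not Orchestrator's actual scheduler. It assumes integer time units and a fixed `duration` per execution; `expand_job`, `dispatch`, and the job and Robot names are hypothetical. Only the 10,000 limit comes from the note above.

```python
import heapq
from typing import Dict, List, Tuple

# Limit stated above for the Allocate Dynamically option.
MAX_EXECUTIONS_PER_JOB = 10_000

Execution = Tuple[int, str, int]        # (created_at, job_name, run_index)
Assignment = Tuple[str, int, str, int]  # (job_name, run_index, robot, start_time)


def expand_job(job_name: str, created_at: int, executions: int) -> List[Execution]:
    """Split one dynamically allocated job into its individual pending executions."""
    if not 1 <= executions <= MAX_EXECUTIONS_PER_JOB:
        raise ValueError(f"executions must be between 1 and {MAX_EXECUTIONS_PER_JOB}")
    return [(created_at, job_name, i) for i in range(executions)]


def dispatch(pending: List[Execution], robot_free_at: Dict[str, int], duration: int) -> List[Assignment]:
    """Give each pending execution, oldest first, to the Robot that becomes free first."""
    # Stable sort: executions created at the same time keep their submission order.
    queue = sorted(pending, key=lambda execution: execution[0])
    robots = [(free_at, name) for name, free_at in robot_free_at.items()]
    heapq.heapify(robots)  # min-heap keyed on the time each Robot becomes free
    assignments: List[Assignment] = []
    for created_at, job_name, run_index in queue:
        free_at, robot = heapq.heappop(robots)
        start = max(free_at, created_at)  # a Robot cannot pick up a job before it exists
        assignments.append((job_name, run_index, robot, start))
        heapq.heappush(robots, (start + duration, robot))  # busy for `duration` units
    return assignments


pending = expand_job("J1", created_at=0, executions=3) + expand_job("J2", created_at=1, executions=1)
schedule = dispatch(pending, {"A": 0, "B": 0}, duration=10)
print(schedule)
# [('J1', 0, 'A', 0), ('J1', 1, 'B', 0), ('J1', 2, 'A', 10), ('J2', 0, 'B', 10)]
```

The heap is a convenient way to find "the Robot that becomes available first". J2 runs last because it was created after all three runs of J1.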

Important!

If a Robot goes offline while executing a job, execution resumes from where it left off once the Robot comes back online.

Execution Priority

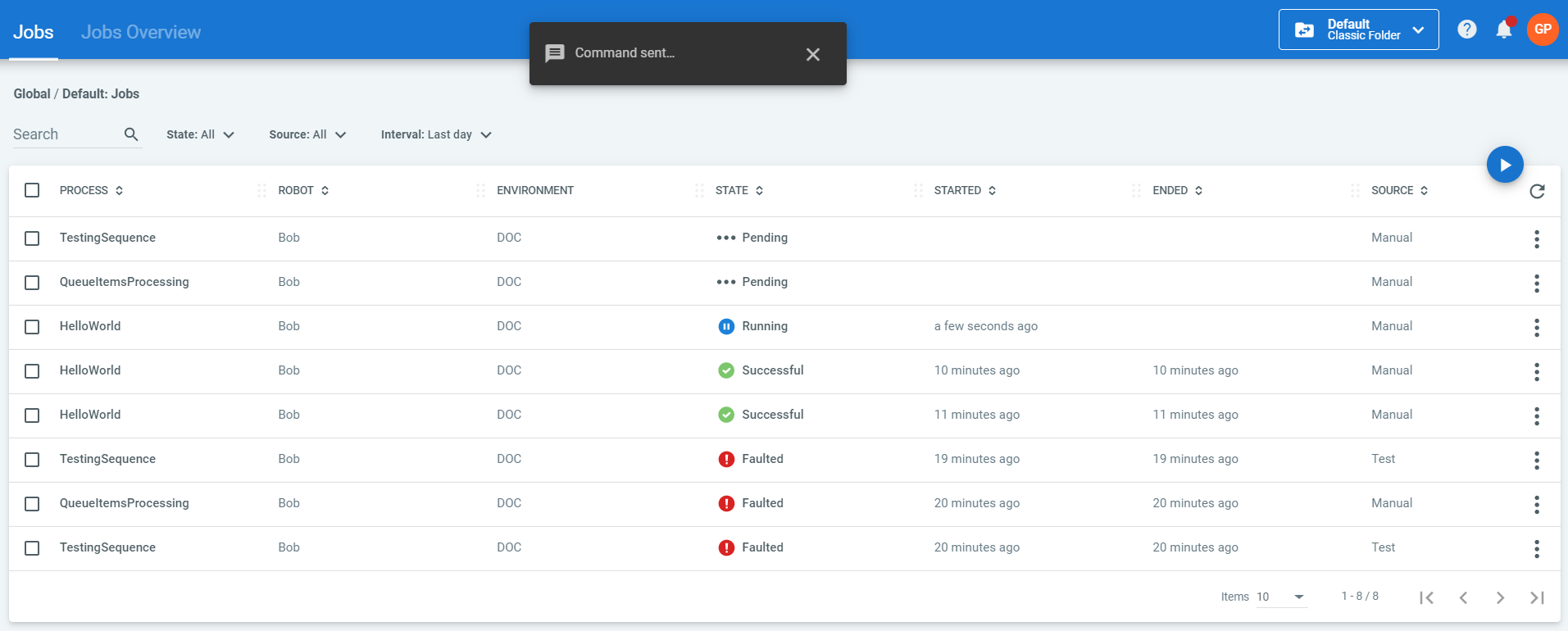

If you start multiple jobs on the same Robot, the first one is executed, while the others are placed in a pending state and queued by creation time. The Robot executes the queued jobs in order, one after the other.

For example, in the screenshot above, three different jobs were started on the same Robot. The first job is running, while the others are pending.

If you start the same job on the same Robot multiple times and the first job has not finished executing, only the second job is placed in the queue.
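The per-Robot ordering described in the first paragraph of this section can be modeled as one running slot plus a FIFO queue. The sketch below is illustrative only. `RobotQueue` and its methods are hypothetical names, and it does not model the special case of starting the same job repeatedly.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional


@dataclass
class RobotQueue:
    """Hypothetical model of one Robot: one running job, the rest pending by creation time."""
    name: str
    running: Optional[str] = None
    pending: Deque[str] = field(default_factory=deque)

    def start_job(self, job: str) -> None:
        if self.running is None:
            self.running = job          # the Robot is idle, so the job runs at once
        else:
            self.pending.append(job)    # otherwise it waits behind older jobs

    def finish_current(self) -> Optional[str]:
        """Complete the running job and promote the oldest pending job, if any."""
        finished = self.running
        self.running = self.pending.popleft() if self.pending else None
        return finished


robot = RobotQueue("Robot1")
for job in ["Job A", "Job B", "Job C"]:   # started in this order
    robot.start_job(job)

print(robot.running, list(robot.pending))  # Job A ['Job B', 'Job C']
robot.finish_current()
print(robot.running, list(robot.pending))  # Job B ['Job C']
```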

If you start a job on multiple Robots on the same machine, and that machine does not run Windows Server, the selected process is executed only by the first Robot and the rest fail. A job instance is still created for each of these executions and displayed on the Jobs page.

High-Density Robots

If you start a job on multiple High-Density Robots on the same Windows Server machine, the selected process is executed by each specified Robot at the same time. A job instance is created for each of these executions and displayed on the Jobs page.
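The contrast between a regular machine and a Windows Server machine with High-Density Robots can be summarized in a few lines. The following is a hypothetical model, not an Orchestrator call. The function name is invented, and the labels "Running" and "Faulted" stand in for "executes the process" and "fails".

```python
from typing import Dict, List


def start_on_same_machine(robots: List[str], windows_server: bool) -> Dict[str, str]:
    """Hypothetical model of starting one job on several Robots of the same machine.

    Each Robot gets its own job instance, as on the Jobs page. On a Windows Server
    machine with High-Density Robots every instance runs at the same time; on any
    other machine only the first Robot runs the process and the rest fail.
    """
    outcome: Dict[str, str] = {}
    for position, robot in enumerate(robots):
        runs = windows_server or position == 0
        outcome[robot] = "Running" if runs else "Faulted"
    return outcome


print(start_on_same_machine(["R1", "R2", "R3"], windows_server=False))
# {'R1': 'Running', 'R2': 'Faulted', 'R3': 'Faulted'}
print(start_on_same_machine(["R1", "R2", "R3"], windows_server=True))
# {'R1': 'Running', 'R2': 'Running', 'R3': 'Running'}
```

In both cases the result has one entry per Robot, which matches the statement that an instance is created for each execution.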

If you are using High-Density Robots and have not enabled RDP on that machine, the following error is displayed each time you start a job: “A specified logon session does not exist. It may already have been terminated.” To set up your machine for High-Density Robots, see the About Setting Up Windows Server for High-Density Robots page.

Long-Running Workflows

Note

This feature is supported only in Unattended environments. Starting a long-running process on an Attended Robot is not supported, because the job can neither be killed from Orchestrator nor effectively resumed.

Processes that require logical fragmentation or human intervention (validations, approvals, exception handling), such as invoice processing and performance reviews, are handled with a set of instruments in the UiPath suite: a dedicated Studio project template called Orchestration Process, the UiPath.Persistence.Activities pack, and task and resource allocation capabilities in Orchestrator.

Broadly, you configure your workflow with a pair of activities (or, for a simple delay, a single one), as listed in the table below. The workflow can be parameterized with the specifics of the execution, so that a suspended job can be resumed only once certain requirements have been met. Resources are allocated for resuming the job only after those requirements are met, so no resources are consumed while the job waits.

In Orchestrator, this appears as the job being suspended while it waits for the requirements to be met, and then resumed and executed as usual. Depending on which pair of activities you use, the completion requirements change and Orchestrator's response adjusts accordingly.
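The suspend/resume cycle described above can be modeled as a small state machine whose resume step is gated by a requirement. The sketch below is a simplified model, not the UiPath.Persistence.Activities API. `LongRunningJob`, `suspend`, `try_resume`, and the task dictionary are hypothetical, and the requirement is expressed as a plain callable.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class LongRunningJob:
    """Hypothetical model of a long-running job; not an Orchestrator or Persistence API."""
    name: str
    state: str = "Running"
    resume_condition: Optional[Callable[[], bool]] = None
    history: List[str] = field(default_factory=lambda: ["Running"])

    def suspend(self, condition: Callable[[], bool]) -> None:
        # Plays the role of the first activity in a pair: the job stops and
        # waits until `condition` holds.
        self.state = "Suspended"
        self.resume_condition = condition
        self.history.append("Suspended")

    def try_resume(self) -> bool:
        # Plays the role of the "... and Resume" activity: nothing is resumed,
        # and no Robot is requested, until the requirement is met.
        if self.state != "Suspended" or self.resume_condition is None:
            return False
        if not self.resume_condition():
            return False
        self.state = "Running"
        self.resume_condition = None
        self.history.append("Resumed")
        return True


task = {"completed": False}          # stands in for a task awaiting validation
job = LongRunningJob("InvoiceApproval")
job.suspend(lambda: task["completed"])

print(job.try_resume(), job.state)   # False Suspended
task["completed"] = True             # the assigned user completes the task
print(job.try_resume(), job.state)   # True Running
print(job.history)                   # ['Running', 'Suspended', 'Resumed']
```

A time-based condition such as `lambda: clock["now"] >= deadline` would model Resume After Delay in the same way.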

| Activities | Use Case |
|---|---|
| Jobs: Start Job and Get Reference, Wait for Job and Resume | Introduce a job condition, such as uploading queue items. After the main job is suspended, the auxiliary job is executed. When that process completes, the main job is resumed. Depending on how you configured your workflow, the resumed job can use the data obtained from the auxiliary process execution. Note: If your workflow uses the Start Job and Get Reference activity to invoke another workflow, your Robot role needs the following permissions: View on Processes; View, Edit, and Create on Jobs; View on Environments. |
| Queues: Add Queue Item and Get Reference, Wait for Queue Item and Resume | Introduce a queue condition, such as having queue items processed. After the main job is suspended, the queue items need to be processed through the auxiliary job. When that process completes, the main job is resumed. Depending on how you configured your workflow, the resumed job can use the output data obtained from the processed queue item. |
| Tasks: Create Form Task, Wait for Task and Resume | Introduce user intervention conditions, which appear in Orchestrator as tasks. After the job is suspended, a task is generated in Orchestrator (as configured in Studio). The assigned user must validate the task, and the job is resumed only after the task is completed. |
| Resume After Delay | Introduce a time interval as a delay, during which the workflow is suspended. After the delay has passed, the job is resumed. |
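Before using Start Job and Get Reference, it can help to check a Robot role against the permissions listed in the Jobs row of the table. The sketch below represents a role as a plain dictionary of resource names to permission sets. That representation and the function name are hypothetical; only the permission list itself comes from the table.

```python
from typing import Dict, Set

# Permissions listed in the table for Robot roles whose workflow uses Start Job and Get Reference.
REQUIRED_FOR_START_JOB: Dict[str, Set[str]] = {
    "Processes": {"View"},
    "Jobs": {"View", "Edit", "Create"},
    "Environments": {"View"},
}


def missing_permissions(role: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return, per resource, the required permissions a hypothetical role definition lacks."""
    missing: Dict[str, Set[str]] = {}
    for resource, needed in REQUIRED_FOR_START_JOB.items():
        lacking = needed - role.get(resource, set())
        if lacking:
            missing[resource] = lacking
    return missing


robot_role = {"Processes": {"View"}, "Jobs": {"View"}}  # hypothetical current role
print({resource: sorted(perms) for resource, perms in missing_permissions(robot_role).items()})
# {'Jobs': ['Create', 'Edit'], 'Environments': ['View']}
```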

Job fragments are not restricted to being executed by the same Robot. They can be executed by any Robot that is available when the job is resumed and ready for execution, within the limits of the execution target configured when the job was defined (see Execution Target above).

Example

Suppose you define a job to be executed by specific Robots, say X, Y, and Z. When you start the job, only Z is available, so the job is executed by Z until it is suspended awaiting user validation. After the validation, when the job is resumed, only X is available, so the job is executed by X.

- From a monitoring point of view, such a job is counted as one, regardless of being fragmented or executed by different Robots.

- Suspended jobs cannot be assigned to Robots; only resumed ones can.
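The X, Y, Z example can be replayed with a short model in which each fragment picks any Robot from the execution target that is free at that moment. `assign_fragments` is a hypothetical helper. As a simplification, it raises an error when no target Robot is free instead of modeling a fragment that waits.

```python
from typing import List, Set


def assign_fragments(targets: List[str], available: List[Set[str]]) -> List[str]:
    """Hypothetical model: each fragment of one job runs on any target Robot free at that moment.

    `available[i]` is the set of Robots that are free when fragment i is ready.
    """
    executed_by: List[str] = []
    for fragment, free_now in enumerate(available):
        candidates = [robot for robot in targets if robot in free_now]
        if not candidates:
            # Simplification: this sketch stops instead of modeling a waiting fragment.
            raise LookupError(f"no target Robot is free for fragment {fragment}")
        executed_by.append(candidates[0])
    return executed_by


# Target X, Y, Z; only Z is free at start, only X after the user validation.
print(assign_fragments(["X", "Y", "Z"], [{"Z"}, {"X"}]))  # ['Z', 'X']
```

Both fragments still belong to one job, so monitoring counts them as a single job.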

To see the triggers required to resume a suspended job, open the Triggers tab on the Job Details window.

Recording

For unattended faulted jobs, if your process had the Enable Recording option switched on, you can download the corresponding execution media to check the last moments of the execution before failure.

The Download Recording option is displayed on the Jobs window only if you have View permissions on Execution Media.
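Taken together, the two paragraphs above describe the conditions under which a recording can be downloaded. The check below combines them into one hypothetical predicate. The function and parameter names are invented for illustration, and "Faulted" is used as the label for a failed job.

```python
def download_recording_available(
    job_state: str,
    unattended: bool,
    recording_enabled: bool,
    can_view_execution_media: bool,
) -> bool:
    """Hypothetical check combining the conditions described in this section."""
    return (
        job_state == "Faulted"         # recordings cover faulted jobs
        and unattended                 # ...executed by Unattended Robots
        and recording_enabled          # ...whose process had Enable Recording on
        and can_view_execution_media   # the option is shown only with View on Execution Media
    )


print(download_recording_available("Faulted", True, True, True))   # True
print(download_recording_available("Faulted", True, True, False))  # False
```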
